Deep learning, a machine learning approach based on multi-layered neural networks, has recently attracted attention across many research and industrial fields. In particular, deep convolutional neural networks (DCNNs), which are specialized for image recognition, are remarkably effective at extracting features from large amounts of data, and their performance far exceeds that of conventional methods in image classification, object detection, anomaly detection, and image super-resolution. Image super-resolution, the task of converting low-resolution images into high-resolution ones, is achieved by training a network on pairs of low- and high-resolution images so that it learns the mapping between them.
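To make "learning the mapping" concrete, here is a minimal NumPy sketch that learns a 2x upscaling from a paired low- and high-resolution grid. It is not one of the DCNNs used in this program: it deliberately swaps the deep network for a single linear 3 x 3 filter per output sub-pixel, fitted in closed form by least squares. That is equivalent to one linear convolution layer with four output channels followed by a sub-pixel rearrangement. The synthetic surface, the block-averaging degradation model, and all function names are hypothetical.

```python
import numpy as np

# Hypothetical helpers and synthetic data, for illustration only.

def downsample2x(hr):
    """Block-average a high-res grid to half resolution (assumed degradation model)."""
    h, w = hr.shape[0] // 2, hr.shape[1] // 2
    return hr[:2 * h, :2 * w].reshape(h, 2, w, 2).mean(axis=(1, 3))

def lr_patches(lr, k=3):
    """Flatten the k x k neighbourhood around every interior low-res cell."""
    r = k // 2
    rows = []
    for i in range(r, lr.shape[0] - r):
        for j in range(r, lr.shape[1] - r):
            rows.append(lr[i - r:i + r + 1, j - r:j + r + 1].ravel())
    return np.array(rows)

def hr_blocks(hr, lr_shape, k=3):
    """The 2 x 2 high-res block under each interior low-res cell (training targets)."""
    r = k // 2
    rows = []
    for i in range(r, lr_shape[0] - r):
        for j in range(r, lr_shape[1] - r):
            rows.append(hr[2 * i:2 * i + 2, 2 * j:2 * j + 2].ravel())
    return np.array(rows)

def with_bias(X):
    """Append a constant column so each filter also learns an offset."""
    return np.hstack([X, np.ones((len(X), 1))])

def fit(lr, hr, k=3):
    """Learn one linear filter (plus bias) per output sub-pixel by least squares."""
    W, *_ = np.linalg.lstsq(with_bias(lr_patches(lr, k)), hr_blocks(hr, lr.shape, k), rcond=None)
    return W

def predict(lr, W, k=3):
    """Apply the learned filters; one row of four sub-pixel values per interior cell."""
    return with_bias(lr_patches(lr, k)) @ W

def rmse(a, b):
    return float(np.sqrt(np.mean((a - b) ** 2)))

# Synthetic smooth "high-res" surface on a 64 x 64 grid and its 32 x 32 counterpart.
y, x = np.mgrid[0:64, 0:64].astype(float)
hr = 100 * np.sin(x / 7) + 80 * np.cos(y / 5) + 30 * np.sin((x + y) / 11)
lr = downsample2x(hr)

W = fit(lr, hr)
targets = hr_blocks(hr, lr.shape)
learned = predict(lr, W)
# Baseline: copy each coarse cell (patch index 4 is the centre) into its 4 sub-pixels.
nearest = np.repeat(lr_patches(lr)[:, [4]], 4, axis=1)
print(rmse(learned, targets), rmse(nearest, targets))
```

On the training grid the learned filters can only match or beat copying each coarse cell into its four sub-pixels, because that copy is itself one of the linear filters the least-squares fit searches over. Architectures such as SRCNN and ESPCN stack several convolutional layers with nonlinearities in between, which is what lets them represent sharper, more irregular structure than a single linear filter.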

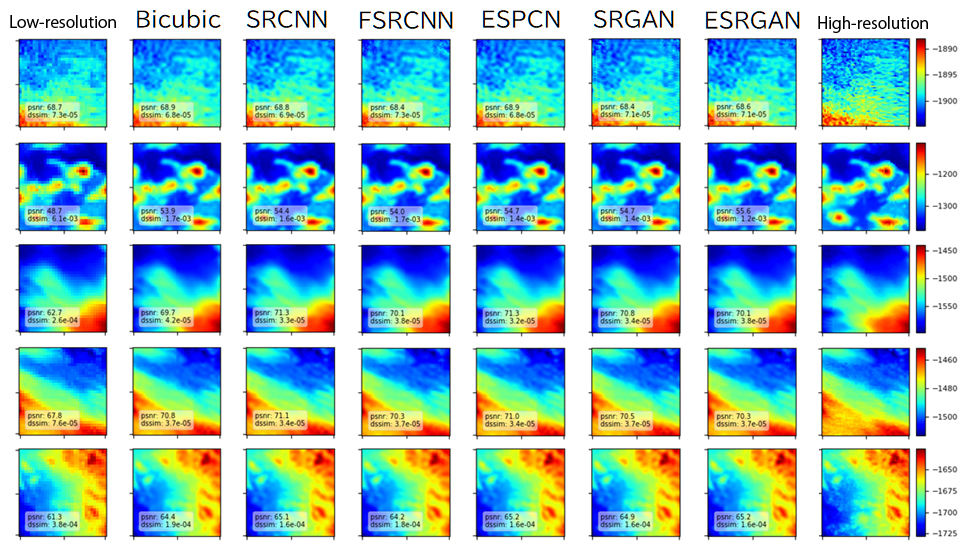

In this research program, we implemented five DCNN architectures for image super-resolution (SRCNN, FSRCNN, ESPCN, SRGAN, and ESRGAN) and tuned them for seafloor topographic maps of the middle Okinawa Trough acquired by JAMSTEC. The figure below shows an example of super-resolving a 100-m mesh bathymetric map to a 50-m mesh over a 3.2-km-square area. All five architectures achieved higher accuracy than bicubic interpolation, particularly over rugged terrain and in areas with missing values. We are currently working to improve the generalization performance and magnification factor of these DCNNs so that they can be applied to a wider range of areas, and we are also developing DCNNs that incorporate bathymetric knowledge.
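The comparison above can be sketched for the bicubic side. A 3.2-km square holds 3200 / 100 = 32 cells per side at 100-m mesh and 3200 / 50 = 64 cells per side at 50-m mesh, so the task is a 2x upscaling from 32 x 32 to 64 x 64. The NumPy sketch below implements bicubic interpolation with the Keys cubic-convolution kernel (a = -0.5) and scores it against a reference grid. The synthetic depths, the block-mean degradation, RMSE as the accuracy measure, and all function names are assumptions; the text does not state which metric or degradation was used, or how missing values were handled.

```python
import numpy as np

# Hypothetical helpers and synthetic data, for illustration only.

def keys_kernel(s, a=-0.5):
    """Keys cubic-convolution kernel, the usual weight function behind 'bicubic'."""
    s = np.abs(s)
    near = (a + 2) * s**3 - (a + 3) * s**2 + 1
    far = a * s**3 - 5 * a * s**2 + 8 * a * s - 4 * a
    return np.where(s <= 1, near, np.where(s < 2, far, 0.0))

def upsample_axis(grid, scale, axis):
    """Cubic interpolation along one axis, pixel-centre aligned, with replicated edges."""
    g = np.moveaxis(grid, axis, -1)
    n = g.shape[-1]
    src = (np.arange(n * scale) + 0.5) / scale - 0.5  # output centres in input coordinates
    base = np.floor(src).astype(int)
    t = src - base
    out = np.zeros(g.shape[:-1] + (n * scale,))
    for k in (-1, 0, 1, 2):  # four taps around each output position
        idx = np.clip(base + k, 0, n - 1)
        out += g[..., idx] * keys_kernel(t - k)
    return np.moveaxis(out, -1, axis)

def bicubic_upsample(grid, scale=2):
    """Separable bicubic: interpolate rows, then columns."""
    return upsample_axis(upsample_axis(grid, scale, 0), scale, 1)

def block_mean(grid, scale=2):
    """Assumed degradation: each coarse cell is the mean of the fine cells it covers."""
    h, w = grid.shape[0] // scale, grid.shape[1] // scale
    return grid[:h * scale, :w * scale].reshape(h, scale, w, scale).mean(axis=(1, 3))

area_m, coarse_m, fine_m = 3200, 100, 50
n_coarse, n_fine = area_m // coarse_m, area_m // fine_m

# Synthetic "true" 50 m bathymetry (depths in metres) and its 100 m counterpart.
y, x = np.mgrid[0:n_fine, 0:n_fine].astype(float) * fine_m
true_50m = -1500 + 120 * np.sin(x / 400) * np.cos(y / 300) + 40 * np.sin((x + y) / 150)
coarse_100m = block_mean(true_50m)

baseline = bicubic_upsample(coarse_100m)
rmse = float(np.sqrt(np.mean((baseline - true_50m) ** 2)))
print(coarse_100m.shape, baseline.shape, round(rmse, 2))

# A single missing 100 m cell: every output whose 4 x 4 stencil touches it becomes NaN.
gappy = coarse_100m.copy()
gappy[16, 16] = np.nan
print(int(np.isnan(bicubic_upsample(gappy)).sum()))
```

With a 2x factor, each coarse cell falls inside the stencil of eight outputs per axis, so one missing 100-m cell blanks an 8 x 8 patch of the 50-m result. This is one way plain interpolation struggles with gaps. A trained DCNN would take the place of `bicubic_upsample` and be scored against the same 50-m reference.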